Training Course on Explainable AI (XAI) in Geospatial Decisions

This training course focuses on Explainable AI (XAI) techniques tailored for geospatial decision-making. It empowers professionals to build, understand, and communicate transparent and trustworthy AI solutions in a spatial context.

Course Overview

Introduction

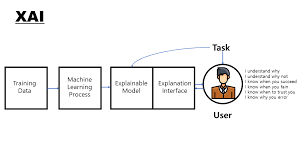

The proliferation of Artificial Intelligence (AI) and Machine Learning (ML) in geospatial analysis has changed how we understand and interact with our world. From environmental monitoring to urban planning and disaster management, AI-driven insights from spatial data are becoming indispensable. However, the "black-box" nature of many advanced AI models poses a significant challenge: it hinders trust and accountability, and makes these systems harder to debug and improve. This course addresses that need by focusing on Explainable AI (XAI) techniques tailored for geospatial decision-making, empowering professionals to build, understand, and communicate transparent and trustworthy AI solutions in a spatial context.

This comprehensive program delves into the theoretical foundations and practical applications of XAI within the geospatial domain. Participants will gain hands-on experience with cutting-edge methodologies and tools to interpret complex geospatial AI models, identify biases, and ensure ethical deployment. By demystifying AI's decision-making processes, this course equips individuals and organizations with the knowledge and skills to foster greater confidence in AI-driven geospatial insights, facilitating more informed, responsible, and impactful decisions across diverse sectors.

Course Duration

10 days

Course Objectives

- Understand the core concepts of Explainable AI (XAI) and its critical role in enhancing model transparency and interpretability for geospatial applications.

- Apply advanced techniques like SHAP and LIME to identify the key spatial features driving AI model predictions in complex geospatial datasets.

- Develop proficiency in creating intuitive geovisualizations and interactive dashboards to communicate AI insights effectively to diverse stakeholders.

- Learn to identify and address algorithmic bias and fairness issues inherent in geospatial AI models, ensuring equitable outcomes.

- Understand the ethical and legal frameworks surrounding AI and XAI in geospatial contexts, including GDPR and other emerging regulations.

- Utilize XAI to identify vulnerabilities and improve the resilience and reliability of geospatial AI systems against adversarial attacks or data shifts.

- Leverage explainability insights to refine model architectures, feature engineering, and training strategies for superior predictive accuracy.

- Bridge the gap between human domain expertise and AI capabilities by fostering a deeper understanding of AI rationale in human-in-the-loop geospatial workflows.

- Explore and apply XAI techniques specifically designed for complex deep learning models in remote sensing and spatial analysis.

- Develop strategies for building and deploying responsible AI solutions that generate actionable insights with clear explanations for geospatial decisions.

- Investigate methods to move beyond correlation and understand the causal relationships identified by AI models in spatial phenomena.

- Solve practical problems in areas like smart cities, disaster management, environmental monitoring, and precision agriculture using XAI.

- Master the art of effectively translating technical XAI outputs into understandable narratives for non-technical audiences, fostering data storytelling.

Organizational Benefits

- Increased Trust and Adoption of AI

- Improved Decision-Making Quality

- Enhanced Regulatory Compliance and Ethical AI

- Faster Debugging and Model Improvement

- Optimized Resource Allocation

- Competitive Advantage

- Upskilled Workforce

Target Audience

- Geospatial Analysts & Scientists

- Data Scientists & Machine Learning Engineers

- Urban Planners & Policy Makers

- Environmental Researchers & Conservationists

- Disaster Management & Emergency Response Professionals

- Public Health & Epidemiology Specialists

- AI/ML Researchers

- Project Managers & Team Leads

Course Modules

Module 1: Foundations of Explainable AI (XAI) in Geospatial Context

- Introduction to AI/ML in Geospatial: The "Black Box" Problem and the Need for XAI.

- Key Concepts: Interpretability vs. Explainability, Transparency, Fairness, Accountability.

- Types of XAI: Model-Agnostic vs. Model-Specific, Local vs. Global Explanations.

- Ethical Considerations of AI in Geospatial Decisions: Bias, Privacy, and Societal Impact.

- Case Study: Understanding land-use change predictions for urban sprawl (e.g., Why did the model predict commercial development here instead of residential?).

Module 2: Geospatial Data for XAI Readiness

- Review of Geospatial Data Types: Raster (satellite imagery, DEMs), Vector (points, lines, polygons).

- Data Preprocessing for XAI: Feature Engineering, Normalization, Handling Spatial Autocorrelation.

- Geospatial Feature Importance: Identifying and ranking relevant spatial variables.

- Challenges of Geospatial Data in XAI: Scale, Resolution, Spatial Heterogeneity.

- Case Study: Preparing satellite imagery and socio-economic data for a poverty prediction model and understanding the most influential input features.
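
The spatial autocorrelation mentioned in this module is commonly summarized with global Moran's I. The sketch below is only an illustration using the Python standard library: the helper `chain_weights` and the toy transect values are hypothetical, and a real workflow would typically build the weights matrix from actual geometries with a dedicated spatial statistics library.

```python
def morans_i(values, weights):
    """Global Moran's I for a list of values and an n x n spatial weights matrix."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    w_sum = sum(sum(row) for row in weights)
    # Cross-products of deviations, weighted by spatial proximity
    num = sum(weights[i][j] * dev[i] * dev[j] for i in range(n) for j in range(n))
    den = sum(d * d for d in dev)
    return (n / w_sum) * num / den


def chain_weights(n):
    """Hypothetical neighbourhood: cells along a transect, adjacent cells are neighbours."""
    return [[1.0 if abs(i - j) == 1 else 0.0 for j in range(n)] for i in range(n)]


# Toy data (assumed): one clustered pattern and one alternating pattern
clustered = [1, 1, 1, 5, 5, 5]
alternating = [1, 5, 1, 5, 1, 5]
w = chain_weights(6)

i_clustered = morans_i(clustered, w)
i_alternating = morans_i(alternating, w)
```

Here the clustered pattern gives a positive value (about 0.6) and the alternating pattern a negative one (about -1.0). Strong positive autocorrelation is a signal that standard random train/test splits may overstate model performance on spatial data.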

Module 3: Interpretable Machine Learning Models for Geospatial Analysis

- White-Box Models: Linear Regression, Logistic Regression, Decision Trees for Spatial Data.

- Visualizing Simple Model Decisions: Regression Coefficients, Decision Boundaries.

- Rule-Based Systems in Geospatial Contexts.

- Trade-offs: Interpretability vs. Predictive Power in Geospatial AI.

- Case Study: Using a spatial decision tree to classify vegetation types and easily trace the rules for each classification.

Module 4: Model-Agnostic XAI Techniques: LIME

- Introduction to LIME (Local Interpretable Model-agnostic Explanations): How it works.

- Generating Local Explanations for Individual Geospatial Predictions.

- Interpreting LIME Output for Geospatial Models.

- Limitations and Best Practices of LIME in Spatial Analysis.

- Case Study: Explaining why a specific parcel of land was identified as high-risk for flooding by a black-box model using LIME.

Module 5: Model-Agnostic XAI Techniques: SHAP

- Introduction to SHAP (SHapley Additive exPlanations): Game Theory Foundations.

- Calculating SHAP Values for Global and Local Geospatial Explanations.

- Visualizing SHAP Summaries, Dependence Plots, and Force Plots for Spatial Features.

- Interpreting Feature Interactions with SHAP in Geospatial Models.

- Case Study: Analyzing SHAP values to understand which environmental factors most strongly influence a deep learning model's prediction of crop yield in different agricultural fields.
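
The game-theory idea behind SHAP can be sketched by computing exact Shapley values through brute-force enumeration of feature subsets. This is a teaching sketch, not the `shap` library's algorithm: it replaces "absent" features with a single baseline point, and the model `flood_risk`, its coefficients, and the example locations are all hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(instance)

    def value(subset):
        x = [instance[i] if i in subset else baseline[i] for i in range(n)]
        return predict(x)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def flood_risk(x):
    """Hypothetical model: rainfall interacts with distance to the river."""
    elevation_m, rainfall_mm, dist_river_km = x
    return (0.02 * rainfall_mm - 0.05 * elevation_m
            + 0.001 * rainfall_mm * (10.0 - dist_river_km))


parcel = [5.0, 120.0, 0.5]      # assumed low-lying parcel near a river
reference = [50.0, 60.0, 5.0]   # assumed "typical" location used as baseline

phi = shapley_values(flood_risk, parcel, reference)
```

The attributions sum to the difference between the prediction for the parcel and the prediction for the reference location, which is the additivity property SHAP plots rely on. Enumeration is exponential in the number of features, so it is practical only for small examples like this one.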

Module 6: Global Explainability Methods for Geospatial AI

- Partial Dependence Plots (PDPs) and Individual Conditional Expectation (ICE) plots for Spatial Variables.

- Accumulated Local Effects (ALE) Plots for Robust Global Interpretations.

- Surrogate Models: Training Interpretable Models to Mimic Complex Geospatial AI.

- Feature Interaction Analysis for Geospatial Contexts.

- Case Study: Using PDPs to understand the global relationship between elevation and a landslide prediction model's output across an entire region.
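
A partial dependence curve can be computed by hand: fix one feature at each grid value for every row, predict, and average. The following is a minimal sketch under stated assumptions; `landslide_score`, its coefficients, and the three sample rows are hypothetical stand-ins for a trained model and real terrain data.

```python
def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction when one feature is forced to each grid value."""
    curve = []
    for g in grid:
        preds = []
        for row in rows:
            modified = list(row)
            modified[feature_index] = g  # overwrite the feature of interest
            preds.append(predict(modified))
        curve.append(sum(preds) / len(preds))
    return curve


def landslide_score(x):
    """Hypothetical model: score rises with elevation and slope."""
    elevation_m, slope_deg = x
    return 0.001 * elevation_m + 0.02 * slope_deg


# Assumed sample of locations: [elevation in m, slope in degrees]
rows = [[200.0, 10.0], [800.0, 25.0], [1500.0, 40.0]]
grid = [0.0, 500.0, 1000.0, 1500.0]

pd_curve = partial_dependence(landslide_score, rows, 0, grid)
```

Because elevation and slope are usually correlated in real terrain, forcing elevation to arbitrary grid values can create unrealistic combinations, which is one motivation for the ALE plots listed in this module.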

Module 7: Explainable Deep Learning for Geospatial Imagery

- Challenges of XAI in Deep Learning: CNNs, RNNs for Remote Sensing.

- Saliency Maps and Class Activation Maps (CAM/Grad-CAM) for Image Explanations.

- Visualizing Feature Activations and Latent Spaces in Geospatial Deep Learning.

- Adversarial Examples and Robustness in Geospatial Deep Learning.

- Case Study: Explaining why a convolutional neural network (CNN) classified a specific area in satellite imagery as deforestation by highlighting contributing pixels.

Module 8: Geospatial Bias and Fairness in AI

- Sources of Bias in Geospatial Data and AI Models: Data Collection, Representation.

- Metrics for Quantifying Fairness in Geospatial Decision-Making.

- Techniques for Debiasing Geospatial AI Models (Pre-processing, In-processing, Post-processing).

- Auditing Geospatial AI Systems for Unfair Outcomes.

- Case Study: Identifying and mitigating demographic bias in a geospatial model used for allocating public resources in urban areas.
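
One simple fairness metric that could be applied to spatial groups is the demographic parity difference: the gap between the highest and lowest rate of favourable decisions across groups. The sketch below is illustrative only; the decisions and the district labels are made-up data, and the choice of grouping (districts here) is an assumption.

```python
def selection_rates(decisions, groups):
    """Share of favourable (truthy) decisions within each group."""
    totals, positives = {}, {}
    for d, g in zip(decisions, groups):
        totals[g] = totals.get(g, 0) + 1
        positives[g] = positives.get(g, 0) + (1 if d else 0)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the most and least favoured group; 0 means equal rates."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up allocation decisions (1 = resource allocated) for two districts
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
districts = ["north", "north", "north", "north",
             "south", "south", "south", "south"]

rates = selection_rates(decisions, districts)
gap = demographic_parity_difference(decisions, districts)
```

In this toy data the north district receives resources in 75% of cases and the south in 25%, a gap of 0.5. A gap alone does not prove unfairness, but it flags where an audit should look more closely.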

Module 9: Responsible AI and AI Governance in Geospatial Applications

- Principles of Responsible AI: Accountability, Transparency, Fairness, Safety.

- Regulatory Landscape for AI in Geospatial: GDPR, AI Acts, Ethical Guidelines.

- Developing AI Governance Frameworks for Geospatial Organizations.

- Building AI Ethics Committees and Best Practices.

- Case Study: Developing a governance framework for an AI system used in disaster risk assessment to ensure ethical deployment and decision-making.

Module 10: Human-Centric XAI for Geospatial Stakeholders

- Designing Explanations for Different Audiences: Technical Users, Domain Experts, Public.

- Interactive XAI Tools and Dashboards for Geospatial Data Exploration.

- User Studies and Human-in-the-Loop Feedback for XAI Improvement.

- The Role of Trust and Confidence in Human-AI Collaboration.

- Case Study: Creating an interactive dashboard for urban planners to explore and understand the rationale behind an AI-driven zoning recommendation.

Module 11: Counterfactual Explanations and Causal XAI in Geospatial

- Introduction to Counterfactual Explanations: "What if" scenarios for Geospatial Decisions.

- Generating Actionable Recommendations from Counterfactuals.

- Causal Inference for Understanding Geospatial Phenomena with AI.

- Integrating Causal Models with Explanations.

- Case Study: Providing counterfactual explanations for a model predicting agricultural land degradation: What changes in irrigation or fertilizer use would have prevented this degradation?
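
A counterfactual explanation can be sketched as a search for the smallest change to the inputs that moves the prediction across a decision threshold. The example below is a simple grid search under stated assumptions: the integer scoring function `degradation_risk`, the threshold, the candidate grid, and the per-feature scales are all hypothetical.

```python
from itertools import product


def degradation_risk(irrigation_mm, fertilizer_kg):
    """Hypothetical risk score in points; higher means more degradation risk."""
    return 500 - irrigation_mm + 2 * fertilizer_kg


def nearest_counterfactual(predict, current, candidates, threshold, scales):
    """Return the candidate below the threshold with the smallest scaled L1 change."""
    best, best_cost = None, float("inf")
    for cand in candidates:
        if predict(*cand) >= threshold:
            continue  # still predicted as degraded
        cost = sum(abs(c - o) / s for c, o, s in zip(cand, current, scales))
        if cost < best_cost:
            best, best_cost = cand, cost
    return best


current = (200, 150)                       # assumed field: mm irrigation, kg fertilizer
candidates = list(product(range(0, 501, 50), range(0, 301, 50)))
scales = (500, 300)                        # assumed feature ranges used to normalize changes

counterfactual = nearest_counterfactual(
    degradation_risk, current, candidates, threshold=500, scales=scales
)
```

With these assumptions the search returns `(250, 100)`: slightly more irrigation and less fertilizer. A counterfactual describes what the model would have predicted, not what would have happened in the field, so causal claims need the separate causal analysis covered in this module.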

Module 12: Advanced Topics in Geospatial XAI

- Concept Bottleneck Models (CBMs) for Interpretable Deep Learning.

- XAI for Graph Neural Networks (GNNs) in Spatial Networks (e.g., transportation).

- Explanation of Time-Series Geospatial Models.

- Uncertainty Quantification in XAI Explanations.

- Case Study: Explaining the decisions of a GNN model used for traffic flow optimization in a city network.

Module 13: Implementing XAI Pipelines in Geospatial Workflows

- Integrating XAI Tools into Existing GIS and Data Science Pipelines (Python libraries: shap, lime, eli5).

- Model Monitoring and Drift Detection with Explainability.

- Automating XAI Reports and Documentation.

- Deploying Explainable Geospatial AI Models.

- Case Study: Building an end-to-end pipeline for a wildfire prediction model that includes automated XAI reporting for fire management agencies.

Module 14: Case Studies and Best Practices in Geospatial XAI

- Deep Dive into successful XAI implementations across various geospatial domains.

- Lessons Learned and Common Pitfalls in XAI Projects.

- Developing a Comprehensive XAI Strategy for Geospatial Organizations.

- Benchmarking and Evaluating XAI Techniques in Practice.

- Case Study: Analyzing a successful XAI implementation in precision agriculture, where farmers can understand yield predictions and nutrient recommendations.

Module 15: Future Trends and Research in Geospatial XAI

- Emerging XAI Techniques and Research Directions.

- Neuro-Symbolic AI and its potential for Geospatial Explainability.

- The Role of Large Language Models (LLMs) in Generating Geospatial Explanations.

- Explainability for Foundation Models in Geospatial AI.

- Open Challenges and Opportunities in the Field.

- Case Study: Discussing the potential of new XAI techniques to interpret and refine climate change models for local policy interventions.

Training Methodology

- Instructor-Led Sessions

- Practical Hands-on Exercises

- Case Studies & Discussions

- Group Projects & Collaborative Learning

- Q&A and Troubleshooting Sessions

- Interactive Demonstrations

- Resource Sharing

Register as a group of 3 or more participants for a discount.

Send us an email: info@datastatresearch.org or call +254724527104

Certification

Upon successful completion of this training, participants will be issued a globally recognized certificate.

Tailor-Made Course

We also offer tailor-made courses based on your needs.

Key Notes

a. The participant must be conversant with English.

b. Upon completion of the training, the participant will be issued an Authorized Training Certificate.

c. Course duration is flexible and the contents can be modified to fit any number of days.

d. The course fee includes facilitation, training materials, 2 coffee breaks, a buffet lunch, and a certificate upon successful completion of the training.

e. One year of post-training support, consultation, and coaching is provided after the course.

f. Payment should be made at least a week before the training commences, to the DATASTAT CONSULTANCY LTD account indicated in the invoice, to enable us to prepare better for you.