Training Course on 3D Computer Vision and Point Cloud Processing

Course Overview

Introduction

The rapidly evolving landscape of 3D data acquisition and analysis is revolutionizing industries from autonomous vehicles and robotics to architecture and manufacturing. This intensive training course delves into the critical domain of 3D Computer Vision and Point Cloud Processing, equipping participants with the essential skills to effectively work with complex 3D datasets generated by cutting-edge sensors like LiDAR and depth cameras. Understanding and manipulating these rich spatial representations are paramount for developing intelligent systems that can perceive, interpret, and interact with the real world in three dimensions, driving innovation across various high-tech sectors.

This course focuses on practical applications and hands-on experience, bridging the gap between theoretical concepts and real-world challenges in 3D perception. Participants will gain expertise in point cloud registration, segmentation, feature extraction, 3D reconstruction, and deep learning for 3D data. With these techniques, attendees will be able to develop robust solutions for spatial AI, digital twins, object recognition, and environmental mapping, supporting automation, quality control, and advanced analytics in the era of Industry 4.0.

Course Duration

10 days

Course Objectives

- Understand the principles and methodologies of capturing 3D point clouds using LiDAR sensors, stereo cameras, and RGB-D depth cameras.

- Develop expertise in noise reduction, outlier removal, downsampling, and data cleaning for raw 3D data.

- Gain practical skills in aligning multiple point clouds from different viewpoints using both classical and deep learning-based registration algorithms.

- Learn to segment 3D point clouds into meaningful objects and regions using clustering algorithms and semantic segmentation techniques.

- Identify and extract key geometric features and descriptors from point clouds for object recognition and scene understanding.

- Understand and apply techniques for generating 3D meshes and surface models from point cloud data.

- Explore and implement deep neural networks specifically designed for 3D point cloud classification, segmentation, and object detection (e.g., PointNet, PointNet++).

- Learn to fuse LiDAR and camera data for enhanced 3D perception and improved accuracy in applications like autonomous driving.

- Become proficient in using industry-standard libraries such as Open3D and PCL (Point Cloud Library) for 3D data processing.

- Understand the challenges and strategies for building efficient and real-time 3D computer vision systems.

- Apply acquired knowledge to address real-world challenges in robotics navigation, industrial inspection, and AR/VR applications.

- Learn metrics and methodologies for quantitatively assessing the accuracy and efficiency of 3D computer vision algorithms.

- Stay abreast of the latest advancements in neural rendering, Gaussian Splatting, and large 3D models.

Organizational Benefits

- Empowering teams with cutting-edge 3D computer vision skills directly translates to developing innovative products and services, fostering a competitive edge in sectors like autonomous systems, smart manufacturing, and spatial analytics.

- Automation of critical tasks such as quality inspection, asset management, and environmental monitoring through advanced 3D data processing leads to significant operational efficiencies and cost savings.

- The ability to extract rich, actionable insights from 3D point cloud data enables more informed strategic planning and better decision-making across various departments.

- Equipping engineers and researchers with the necessary tools and techniques for 3D perception accelerates prototyping and deployment of new AI-powered solutions.

- Investing in training for 3D computer vision and point cloud processing enhances employee skill sets, boosting morale and aiding in the retention of valuable technical talent.

- Unlocking the potential of 3D data opens doors to new business models and revenue opportunities, particularly in areas like digital twin creation, 3D mapping, and virtual reality solutions.

Target Audience

- Software Engineers and Developers.

- Research Scientists and Academics in computer vision, robotics, geomatics, or related fields.

- Data Scientists and AI/ML Engineers.

- Robotics Engineers and Autonomous Vehicle Developers.

- GIS Professionals and Surveyors.

- Manufacturing Engineers and Quality Control Specialists seeking to implement 3D inspection solutions.

- Architects, Civil Engineers, and Construction Professionals utilizing 3D scanning and BIM (Building Information Modeling).

- Anyone seeking a comprehensive understanding and practical skills in 3D computer vision and point cloud processing.

Course Outline

Module 1: Fundamentals of 3D Data and Sensors

- Introduction to 3D Space: Coordinate systems, transformations, and homogeneous coordinates.

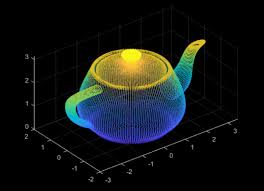

- Overview of 3D Data Representations: Point clouds, meshes, voxels, and implicit surfaces.

- LiDAR Technology: Principles, types (terrestrial, airborne, mobile), and data characteristics.

- Depth Cameras: RGB-D sensors (Kinect, RealSense) and their operational principles.

- Case Study: Analyzing LiDAR data for urban planning to identify building footprints and vegetation density.
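
As an illustration of the coordinate-transformation topic in this module, the following is a minimal sketch of how a 4x4 homogeneous matrix combines a rotation and a translation. The helper names (`rotation_z`, `translation`, `matmul`, `transform_point`) are hypothetical and chosen for this example only; they are not part of Open3D or PCL.

```python
import math

def rotation_z(theta):
    """4x4 homogeneous matrix for a rotation of theta radians about the z axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0, 0.0],
            [s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [0.0, 0.0, 0.0, 1.0]]

def translation(tx, ty, tz):
    """4x4 homogeneous matrix for a translation by (tx, ty, tz)."""
    return [[1.0, 0.0, 0.0, tx],
            [0.0, 1.0, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def transform_point(T, p):
    """Apply T to a 3D point by lifting it to homogeneous coordinates (x, y, z, 1)."""
    h = (p[0], p[1], p[2], 1.0)
    out = [sum(T[i][k] * h[k] for k in range(4)) for i in range(4)]
    return (out[0] / out[3], out[1] / out[3], out[2] / out[3])

# Rotate 90 degrees about z first, then translate by one unit along x.
# The rightmost matrix in the product is applied first.
T = matmul(translation(1.0, 0.0, 0.0), rotation_z(math.pi / 2))
moved = transform_point(T, (1.0, 0.0, 0.0))
```

Composing both steps into one matrix is what makes chains of sensor-to-world transforms convenient to handle.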

Module 2: Point Cloud Acquisition and Data Formats

- Practical aspects of 3D data capture using various sensors.

- Understanding common point cloud file formats: .pcd, .ply, .las, .e57.

- Loading, saving, and basic manipulation of point cloud data in Python.

- Introduction to Open3D and PCL for data handling.

- Case Study: Capturing and processing point clouds of an industrial assembly line for quality inspection using a structured light scanner.
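
To make the file-format topic concrete, here is a small sketch of writing and reading an ASCII `.ply` file using only the Python standard library. It assumes a file that stores just `x`, `y`, `z` float properties per vertex; real files often carry colors, normals, or binary payloads, which libraries such as Open3D or PCL handle. The function names are hypothetical.

```python
import io

def write_ply_ascii(points, stream):
    """Write xyz points as an ASCII PLY file to a text stream."""
    stream.write("ply\nformat ascii 1.0\n")
    stream.write(f"element vertex {len(points)}\n")
    stream.write("property float x\nproperty float y\nproperty float z\n")
    stream.write("end_header\n")
    for x, y, z in points:
        stream.write(f"{x} {y} {z}\n")

def read_ply_ascii(stream):
    """Read xyz from an ASCII PLY whose first three vertex properties are x, y, z."""
    if stream.readline().strip() != "ply":
        raise ValueError("not a PLY file")
    count = 0
    while True:
        line = stream.readline()
        if not line:
            raise ValueError("missing end_header")
        tokens = line.split()
        if tokens[:2] == ["element", "vertex"]:
            count = int(tokens[2])  # number of vertex rows after the header
        elif tokens == ["end_header"]:
            break
    points = []
    for _ in range(count):
        x, y, z = stream.readline().split()[:3]
        points.append((float(x), float(y), float(z)))
    return points

# Round trip through an in-memory buffer instead of a file on disk.
cloud = [(0.0, 0.0, 0.0), (1.0, 0.5, -2.0)]
buffer = io.StringIO()
write_ply_ascii(cloud, buffer)
buffer.seek(0)
restored = read_ply_ascii(buffer)
```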

Module 3: Point Cloud Pre-processing: Cleaning and Normalization

- Noise filtering techniques: Statistical outlier removal, bilateral filtering.

- Downsampling strategies: Voxel grid downsampling, farthest point sampling.

- Normal estimation for point clouds: Understanding surface orientation.

- Data cleaning for real-world noisy sensor data.

- Case Study: Cleaning raw LiDAR scans of a construction site to remove noise from dust and reflections, preparing data for progress monitoring.
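
The voxel grid downsampling mentioned in this module can be sketched in a few lines. This is a simplified, pure-Python version that replaces all points falling in the same cubic cell with their centroid; the function name and the sample values are illustrative assumptions, and production code would normally use a library implementation.

```python
import math
from collections import defaultdict

def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same cubic voxel with their centroid."""
    buckets = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis; floor keeps negative coordinates consistent.
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(group) for axis in zip(*group))
            for group in buckets.values()]

cloud = [(0.1, 0.1, 0.1), (0.3, 0.1, 0.1), (1.2, 0.0, 0.0), (-0.2, 0.0, 0.0)]
reduced = voxel_downsample(cloud, voxel_size=1.0)
```

A larger `voxel_size` gives a sparser cloud and faster downstream processing, at the cost of fine geometric detail.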

Module 4: Geometric Transformations and Alignment

- Rigid transformations (translation and rotation) and similarity transformations, which add uniform scaling.

- Introduction to the Iterative Closest Point (ICP) algorithm for point cloud registration.

- Variants and optimizations of ICP (e.g., Point-to-Plane ICP).

- Global registration techniques (e.g., RANSAC for pose estimation).

- Case Study: Registering multiple scans of a complex mechanical part from different angles to create a complete 3D model for reverse engineering.
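
The ICP loop covered in this module alternates between two steps: match each source point to its nearest target point, then solve for the rigid motion that best aligns the matched pairs. The sketch below shows point-to-point ICP in 2D only, an assumption made to keep the closed-form alignment short and free of external libraries; the 3D case typically solves the same least-squares problem with an SVD. All function names are hypothetical.

```python
import math

def nearest(p, cloud):
    """Brute-force nearest neighbor of p in cloud."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping paired src onto dst."""
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        num += px * qy - py * qx  # cross terms
        den += px * qx + py * qy  # dot terms
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def apply_rigid_2d(points, theta, tx, ty):
    """Rotate points by theta about the origin, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, iterations=20):
    """Point-to-point ICP: re-match and re-align for a fixed number of iterations."""
    current = list(source)
    for _ in range(iterations):
        matches = [nearest(p, target) for p in current]
        theta, tx, ty = best_rigid_2d(current, matches)
        current = apply_rigid_2d(current, theta, tx, ty)
    return current

# Synthetic test data: the target is the source after a small, known motion.
source = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 3.0), (1.0, 1.5)]
target = apply_rigid_2d(source, 0.1, 0.2, -0.1)
aligned = icp_2d(source, target)
```

ICP only converges to the right answer when the initial misalignment is small, which is why global registration is usually run first on real scans.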

Module 5: Point Cloud Segmentation: Classical Methods

- Segmentation based on geometric features: Planar segmentation, cylinder fitting.

- Clustering algorithms: Euclidean clustering, DBSCAN for object separation.

- Connected components analysis for identifying distinct objects.

- Challenges in segmenting unstructured point clouds.

- Case Study: Segmenting individual trees from a large forest LiDAR scan for forestry management and biomass estimation.
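
Euclidean clustering, listed above, can be illustrated with a simple region-growing sketch: a cluster keeps absorbing any unvisited point that lies within a distance tolerance of a point already in it. This version uses a brute-force neighbor search for clarity, whereas practical implementations rely on a k-d tree; the function name and parameters are assumptions for this example.

```python
from collections import deque

def euclidean_clusters(points, tolerance, min_size=1):
    """Group point indices so that neighbors within `tolerance` share a cluster."""
    tol2 = tolerance ** 2
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        queue = deque([seed])
        members = [seed]
        while queue:
            i = queue.popleft()
            # Collect neighbors first, then remove them, to avoid mutating the set mid-iteration.
            near = [j for j in unvisited
                    if sum((a - b) ** 2 for a, b in zip(points[i], points[j])) <= tol2]
            for j in near:
                unvisited.remove(j)
            queue.extend(near)
            members.extend(near)
        if len(members) >= min_size:
            clusters.append(sorted(members))
    return clusters

cloud = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.0, 0.0),
         (5.0, 5.0, 5.0), (5.1, 5.0, 5.0)]
clusters = sorted(euclidean_clusters(cloud, tolerance=0.15))
```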

Module 6: Point Cloud Segmentation: Deep Learning Approaches

- Introduction to deep learning architectures for point clouds.

- PointNet and PointNet++: Direct processing of raw point clouds.

- Convolutional Neural Networks (CNNs) for voxelized 3D data.

- Graph Neural Networks (GNNs) for point cloud analysis.

- Case Study: Semantic segmentation of an indoor scene point cloud to classify furniture, walls, and floors for robotic navigation.
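
One core idea behind PointNet is that a point cloud is an unordered set, so the network applies the same function to every point and then aggregates with a symmetric operation such as max pooling. The toy sketch below illustrates only that permutation-invariance property. The weights are arbitrary, untrained values chosen for illustration, and a real model would be built in a framework such as PyTorch or TensorFlow with several learned layers.

```python
import random

# Hypothetical fixed weights for one shared layer: 4 output features, 3 inputs (x, y, z).
WEIGHTS = [[0.5, -1.0, 0.25],
           [1.0, 0.5, -0.5],
           [-0.75, 0.25, 1.0],
           [0.1, 0.2, 0.3]]
BIAS = [0.0, 0.1, -0.1, 0.0]

def point_features(p):
    """Shared per-point layer: linear map followed by ReLU."""
    return [max(0.0, sum(w * c for w, c in zip(row, p)) + b)
            for row, b in zip(WEIGHTS, BIAS)]

def global_feature(points):
    """Max-pool each feature channel over all points (a symmetric function)."""
    per_point = [point_features(p) for p in points]
    return [max(channel) for channel in zip(*per_point)]

cloud = [(0.0, 1.0, 2.0), (1.5, -0.5, 0.3), (-1.0, 0.2, 0.8), (0.4, 0.4, -0.6)]
shuffled = cloud[:]
random.Random(0).shuffle(shuffled)

# Reordering the input points leaves the pooled descriptor unchanged.
same = global_feature(cloud) == global_feature(shuffled)
```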

Module 7: Feature Extraction and Description for 3D Data

- Local feature descriptors: FPFH, SHOT, 3D SIFT.

- Global feature descriptors for scene recognition.

- Learning-based feature extraction for robust matching.

- Applications in object recognition and 3D matching.

- Case Study: Developing a system to recognize specific machinery components in an industrial setting using extracted 3D features for automated inventory.

Module 8: 3D Reconstruction from Point Clouds

- Surface reconstruction algorithms: Poisson reconstruction, ball pivoting.

- Mesh generation from unorganized point clouds.

- Texture mapping and colorization of 3D models.

- Evaluating the quality of reconstructed surfaces.

- Case Study: Reconstructing a 3D model of a historical monument from drone-captured point cloud data for preservation and virtual tourism.

Module 9: Object Detection and Tracking in 3D

- 3D bounding box prediction for object localization.

- Deep learning frameworks for 3D object detection (e.g., VoteNet, SECOND).

- Multi-object tracking in 3D point cloud sequences.

- Challenges in real-time 3D object detection and tracking.

- Case Study: Detecting and tracking pedestrians and vehicles in autonomous driving scenarios using LiDAR and camera fusion.
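
Predicted 3D bounding boxes are commonly scored against ground truth with intersection over union (IoU). The sketch below computes IoU for axis-aligned boxes only, which is a simplifying assumption: detectors for driving scenes usually predict boxes with a heading angle, and that requires a polygon intersection step not shown here. Boxes are represented as hypothetical `(min_corner, max_corner)` tuples.

```python
def box_volume(box):
    """Volume of an axis-aligned box given as (min_corner, max_corner)."""
    (x0, y0, z0), (x1, y1, z1) = box
    return max(0.0, x1 - x0) * max(0.0, y1 - y0) * max(0.0, z1 - z0)

def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes."""
    lo = tuple(max(p, q) for p, q in zip(a[0], b[0]))
    hi = tuple(min(p, q) for p, q in zip(a[1], b[1]))
    inter = box_volume((lo, hi))  # zero when the boxes do not overlap
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0

predicted = ((0.0, 0.0, 0.0), (2.0, 2.0, 2.0))
ground_truth = ((1.0, 0.0, 0.0), (3.0, 2.0, 2.0))
far_away = ((10.0, 10.0, 10.0), (11.0, 11.0, 11.0))

overlap_score = iou_3d(predicted, ground_truth)
disjoint_score = iou_3d(predicted, far_away)
```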

Module 10: Sensor Fusion: LiDAR and Camera Integration

- Camera calibration and LiDAR-camera extrinsic calibration.

- Projecting 3D points onto 2D images.

- Integrating depth information from LiDAR with rich visual features from cameras.

- Fusion strategies for improved perception: early, late, and mid-level fusion.

- Case Study: Enhancing object detection accuracy for autonomous robots by fusing high-resolution camera images with precise LiDAR depth measurements.
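
Projecting LiDAR points into a camera image, as listed above, combines the extrinsic calibration (rotation `R` and translation `t` from the LiDAR frame to the camera frame) with the camera intrinsics. The following is a minimal sketch under an ideal pinhole model with no lens distortion; the calibration values are made up for illustration and the function name is hypothetical.

```python
def project_points(points_lidar, R, t, fx, fy, cx, cy):
    """Project 3D LiDAR points to pixel coordinates with a pinhole camera model."""
    pixels = []
    for p in points_lidar:
        # Extrinsics: move the point from the LiDAR frame into the camera frame.
        xc, yc, zc = (sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
        if zc <= 0:
            continue  # point is behind the image plane, so it is not visible
        # Intrinsics: perspective division, then scale and shift to pixels.
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels

# Illustrative calibration: frames already aligned, 640x480 image, 500 px focal length.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
points = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (0.0, 0.0, -1.0)]
pixels = project_points(points, R, t, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
```

Once each point has a pixel location, its depth can be paired with image features or colors at that pixel, which is the basis of the fusion strategies in this module.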

Module 11: Advanced Topics in 3D Computer Vision

- Neural Radiance Fields (NeRFs) and neural rendering.

- Gaussian Splatting for real-time 3D reconstruction and rendering.

- Large-scale point cloud processing and optimization.

- Point cloud compression techniques.

- Case Study: Generating photorealistic 3D environments for virtual reality training simulations using NeRF technology.

Module 12: Applications in Robotics and Autonomous Systems

- SLAM (Simultaneous Localization and Mapping) with 3D data.

- Path planning and obstacle avoidance using point clouds.

- Robotic manipulation and grasping based on 3D perception.

- Autonomous navigation in complex environments.

- Case Study: Implementing a SLAM system for an indoor autonomous mobile robot using a 3D LiDAR sensor to create a map and localize itself.

Module 13: Applications in Industrial Inspection and Quality Control

- Automated defect detection using 3D point cloud analysis.

- Dimensional metrology and tolerance checking with 3D scanners.

- Reverse engineering and manufacturing process optimization.

- Robotic guidance for precision assembly.

- Case Study: Detecting manufacturing defects on car body panels by comparing scanned 3D point clouds with a CAD model.

Module 14: Applications in AR/VR and Digital Twins

- Creating immersive augmented reality experiences with real-time 3D tracking.

- Building virtual reality environments from scanned real-world data.

- Digital twin creation for smart cities and industrial facilities.

- Interacting with 3D models in AR/VR applications.

- Case Study: Developing a digital twin of a factory floor for real-time monitoring of machinery and predictive maintenance.

Module 15: Practical Implementation and Project Work

- Setting up a development environment (Python, Open3D, PCL, PyTorch/TensorFlow).

- Guided hands-on projects applying learned concepts.

- Troubleshooting common issues in 3D data processing.

- Best practices for developing robust 3D computer vision pipelines.

- Case Study: Participants will work on a capstone project, such as building a simple 3D object detection system for a custom dataset or developing a point cloud registration application for mobile scanning.

Training Methodology

This course employs a blended learning approach, combining theoretical foundations with extensive practical application.

- Interactive Lectures: Engaging presentations covering core concepts, algorithms, and current methodologies.

- Hands-on Coding Sessions: Guided exercises and labs using Python, Open3D, PCL, and deep learning frameworks (PyTorch/TensorFlow). Participants will work with real and synthetic 3D datasets.

- Practical Demonstrations: Live demonstrations of 3D data acquisition, processing pipelines, and application development.

- Case Study Analysis: In-depth examination of real-world industry applications and scenarios, fostering critical thinking and problem-solving skills.

- Individual and Group Projects: Opportunities for participants to apply learned concepts to solve challenging 3D computer vision problems, culminating in a capstone project.

- Q&A and Discussion Forums: Dedicated time for addressing participant questions and fostering collaborative learning.

- Expert-Led Instruction: Taught by experienced professionals and researchers in the field of 3D Computer Vision.

Register as a group of 3 or more participants for a discount.

Send us an email: [email protected] or call +254724527104

Certification

Upon successful completion of this training, participants will be issued a globally recognized certificate.